Understanding Scientific Research

Determining the best available evidence to support clinical treatment decisions relies on knowledge of study design and study quality (1). Since the time of Hippocrates, medicine has struggled to balance the uncontrolled experience of healers with observations obtained by rigorous investigation of claims regarding the effects of health interventions (2).

Not all research is of sufficient quality to inform clinical decision-making; therefore, the evidence must be critically appraised by considering these major aspects (3):

- Study design – Is the study design appropriate to the outcomes measured?

- Study quality/validity (i.e. detailed study methods and execution) – Can the research be trusted?

- Consistency – Is the effect similar across studies?

- Impact – Are the results clinically important?

- Applicability – Can the result be applied to your patient?

- Bias – Is there a risk of bias, including publication bias, confirmation bias, plausibility bias, expectation bias or commercial bias (who sponsored the study)?

Study designs

The available therapeutic literature can be broadly categorised into observational studies and studies with a randomised experimental design (3).

The study design is critical in informing the reader about the relevance of the study to the question being addressed. The various research methods are described in Tables 1–3.

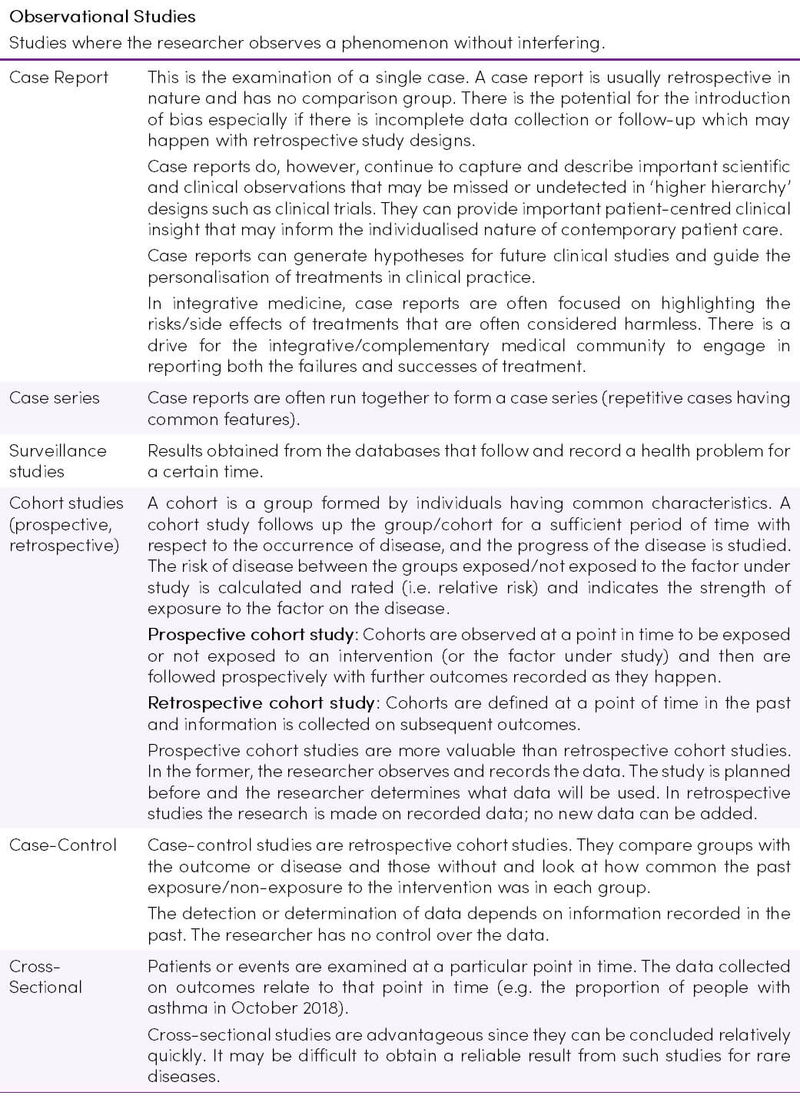

Table 1. Observational Study Designs (4–11)

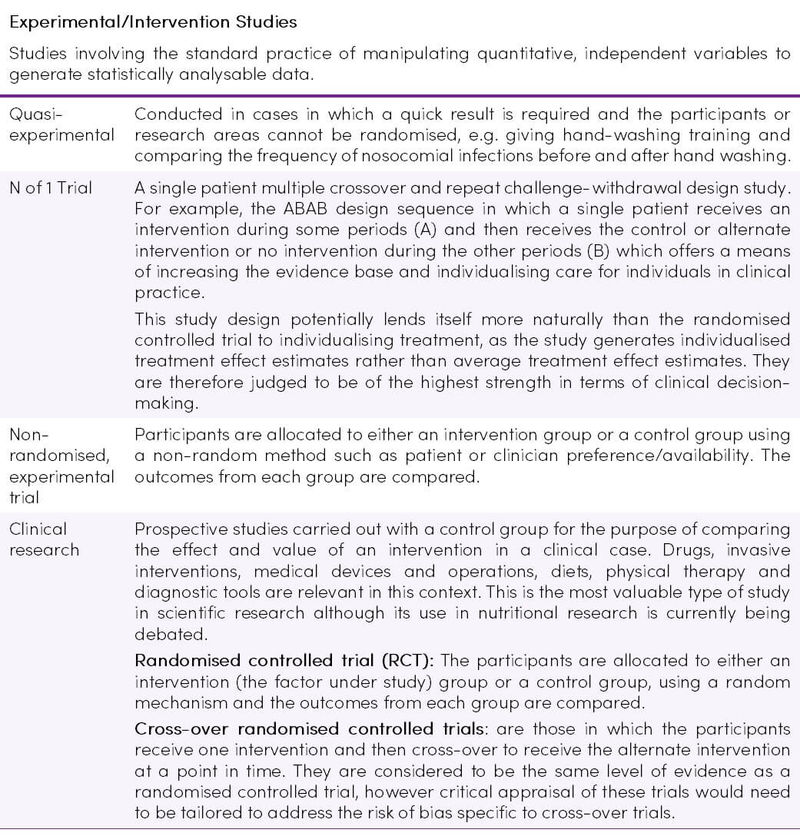

Table 2. Experimental/Intervention Study Designs (4–11)

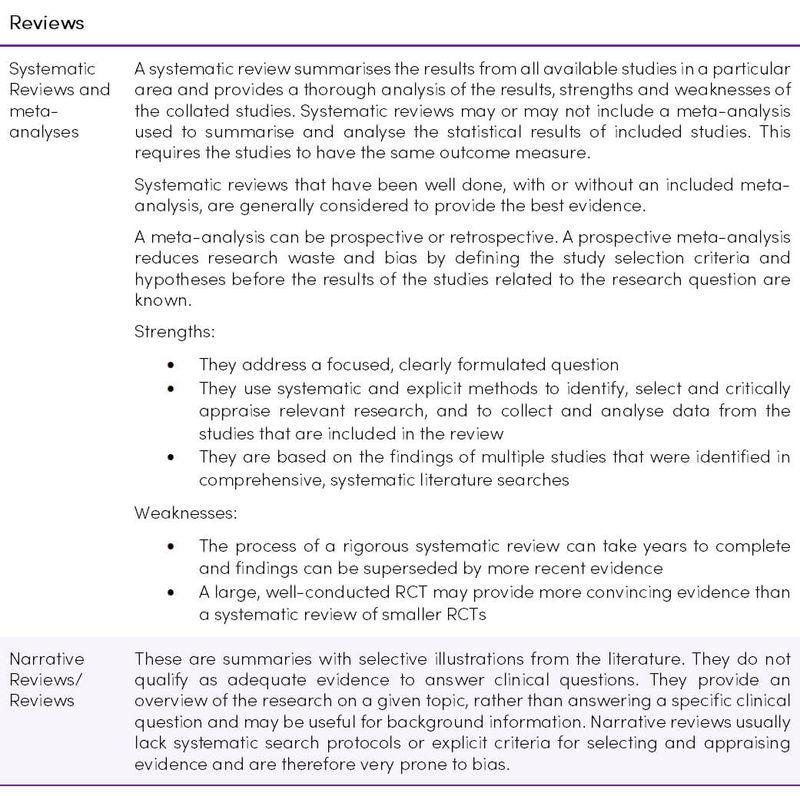

Hierarchy/Grade of Evidence

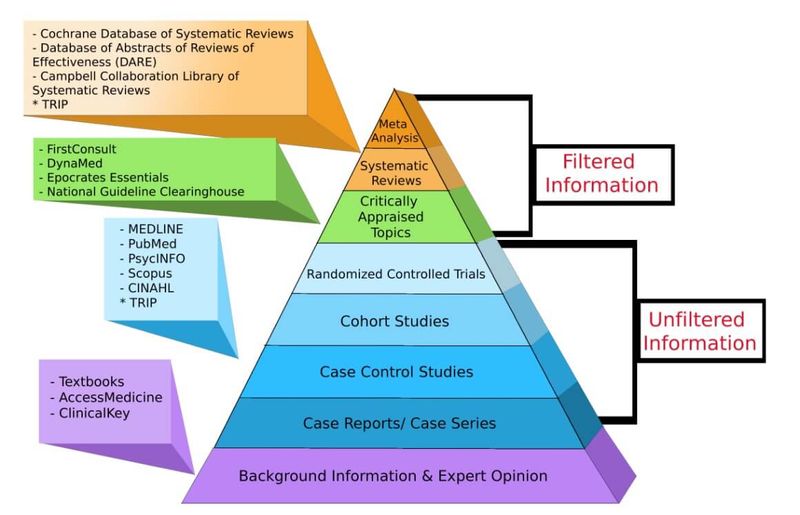

The first principle of evidence-based medicine is that not all evidence is equal and that a hierarchy of evidence exists (Figure 1) (13). Study designs at higher levels of the pyramid generally provide higher-quality evidence with a lower risk of bias, and confidence in causal relationships increases towards the top (14).

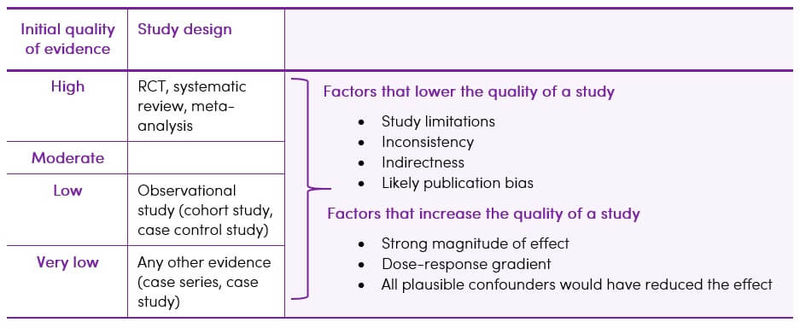

Another system for rating the strength of evidence is GRADE (Table 4). GRADE provides a more sophisticated hierarchy of evidence that addresses all elements related to the credibility of the evidence: study design, risk of bias (study strengths and limitations), precision, consistency (variability in results between studies), directness (applicability), publication bias, magnitude of effect and dose-response gradients (2).

Studies are rated as level A (High), level B (Moderate), level C (Low) or level D (Very Low). An initial grade is assigned based on the study design (as per the hierarchy pyramid) and is then adjusted up or down based on the individual qualities of the study (1).

Figure 1. Hierarchy of evidence pyramid. Reproduced from (15) under the CC BY-NC-ND 3.0 licence.

Table 4. Quality of evidence: The GRADE system (16–19)

The best available evidence may not come from the optimal study type. For example, if treatment effects found in well-designed cohort studies are sufficiently large and consistent, this may provide more convincing evidence than the findings of a weaker RCT (5).
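The GRADE procedure described above (an initial grade from the study design, then adjustment up or down) can be sketched in a few lines of Python. This is a simplified illustration, not an implementation of the official GRADE guidance: it assumes the usual GRADE convention that randomised trials start at High and observational studies start at Low, and it treats each adjustment as a whole number of levels decided by the appraiser. The names `Grade`, `STARTING_GRADE` and `grade_certainty` are hypothetical.

```python
from enum import IntEnum


class Grade(IntEnum):
    """GRADE levels using the A-D labels above (higher value = more certainty)."""
    D_VERY_LOW = 1
    C_LOW = 2
    B_MODERATE = 3
    A_HIGH = 4


# Assumed starting points (simplified GRADE convention):
# randomised trials start High, observational studies start Low.
STARTING_GRADE = {
    "randomised": Grade.A_HIGH,
    "observational": Grade.C_LOW,
}


def grade_certainty(design: str, downgrades: int = 0, upgrades: int = 0) -> Grade:
    """Start from the design-based grade, then move down/up by whole levels.

    downgrades: total levels lost, e.g. for risk of bias, inconsistency,
        indirectness, imprecision or publication bias.
    upgrades: total levels gained, e.g. for a large magnitude of effect
        or a dose-response gradient.
    The result is clamped to the Very Low..High range.
    """
    if downgrades < 0 or upgrades < 0:
        raise ValueError("adjustments must be non-negative")
    level = STARTING_GRADE[design] - downgrades + upgrades
    level = max(Grade.D_VERY_LOW, min(Grade.A_HIGH, level))
    return Grade(level)


# A well-designed cohort study with a large, consistent effect (upgraded one level)
print(grade_certainty("observational", upgrades=1).name)  # B_MODERATE
# A weaker RCT with serious risk of bias and imprecision (downgraded two levels)
print(grade_certainty("randomised", downgrades=2).name)   # C_LOW
```

The two example calls mirror the point made in the paragraph above: once adjustments are applied, the upgraded cohort study can end up rated higher than the downgraded RCT.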

The P.I.C.O. Model

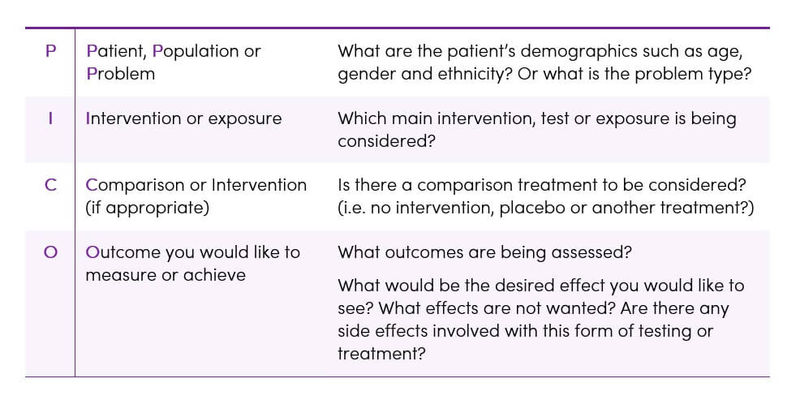

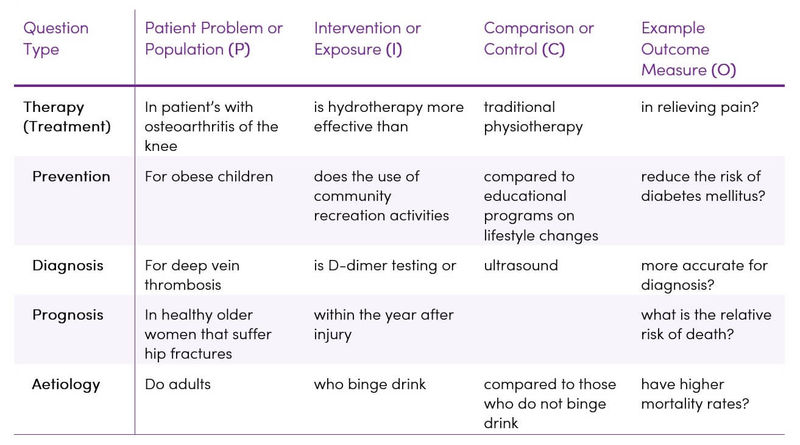

Determining the quality of evidence across studies depends on having a clearly defined question to be answered and on considering all of the outcomes that are important to the patient or population group (3). Without a well-focused question, it can be very difficult and time-consuming to identify appropriate resources and search for relevant evidence. Practitioners of evidence-based practice (EBP) often use a specialised framework called P.I.C.O. to define a clinical question in terms of the specific patient problem and to facilitate the literature search (Table 5) (5).

Table 5. The P.I.C.O model for clinical questions (5)

Other factors to consider when forming the clinical question:

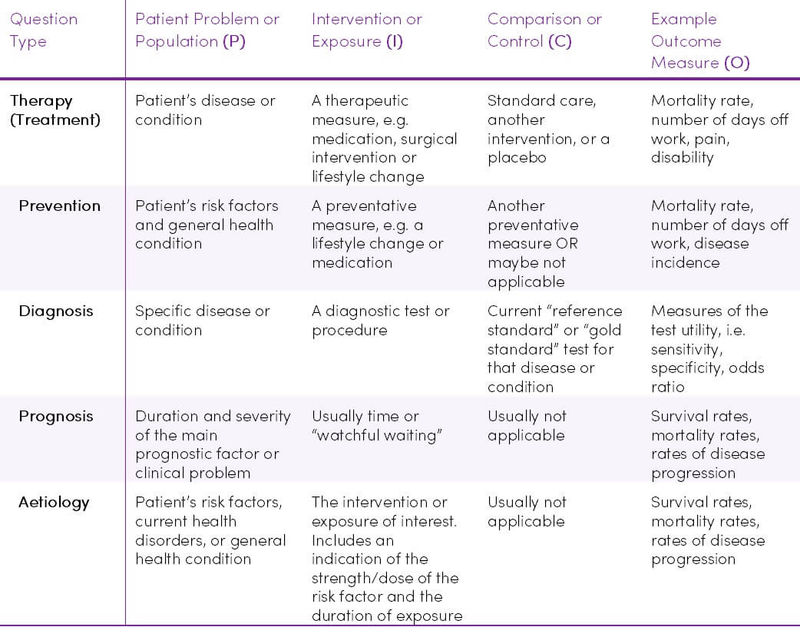

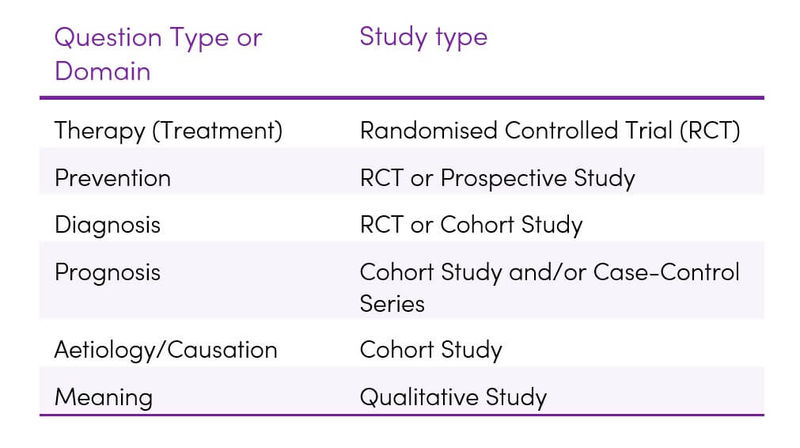

- What type of question are you asking: diagnosis, aetiology, therapy, prognosis or prevention? (Table 6)

- What would be the best study design/methodology needed to answer the research question? (Table 7)

Table 6. Ways in which P.I.C.O. varies according to the type of question being asked (5)

Table 7. Optimal study methodologies for the main types of research questions (5)

Once the main elements of the question have been identified using the P.I.C.O. framework, it is easy to write a question statement to guide the literature search. Table 8 provides some examples.

Table 8. Examples of P.I.C.O. question statements (5)
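Turning the P.I.C.O. elements into a question statement, and then into a search string, can be sketched as follows. This is a hypothetical illustration: the `PICOQuestion` class, the sentence template in `statement()` and the `search_string` helper are assumptions, not the templates used in Table 8. The search string uses the common convention of joining synonyms for one concept with OR and joining separate concepts with AND; exact syntax varies between databases such as PubMed and those on EBSCOhost, so treat the output as a starting point.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PICOQuestion:
    """Hypothetical container for the four P.I.C.O. elements of a clinical question."""
    patient: str                      # P: patient, population or problem
    intervention: str                 # I: intervention, exposure or test
    outcome: str                      # O: outcome of interest
    comparison: Optional[str] = None  # C: comparison (may be absent)

    def statement(self) -> str:
        """Assemble a question statement using an illustrative template."""
        comparison = f", compared with {self.comparison}," if self.comparison else ""
        return (f"In {self.patient}, does {self.intervention}{comparison} "
                f"affect {self.outcome}?")


def search_string(concepts: list[list[str]]) -> str:
    """OR together synonyms within each concept, then AND the concepts together.

    Multi-word terms are quoted so that they are searched as phrases.
    """
    groups = []
    for synonyms in concepts:
        terms = " OR ".join(f'"{t}"' if " " in t else t for t in synonyms)
        groups.append(f"({terms})")
    return " AND ".join(groups)


# Hypothetical example question
q = PICOQuestion(
    patient="adults with chronic low back pain",
    intervention="a supervised exercise programme",
    comparison="usual care",
    outcome="pain intensity",
)
print(q.statement())
# In adults with chronic low back pain, does a supervised exercise programme, compared with usual care, affect pain intensity?

print(search_string([["low back pain", "lumbago"], ["exercise", "exercise therapy"], ["pain"]]))
# ("low back pain" OR lumbago) AND (exercise OR "exercise therapy") AND (pain)
```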

Evaluating the literature

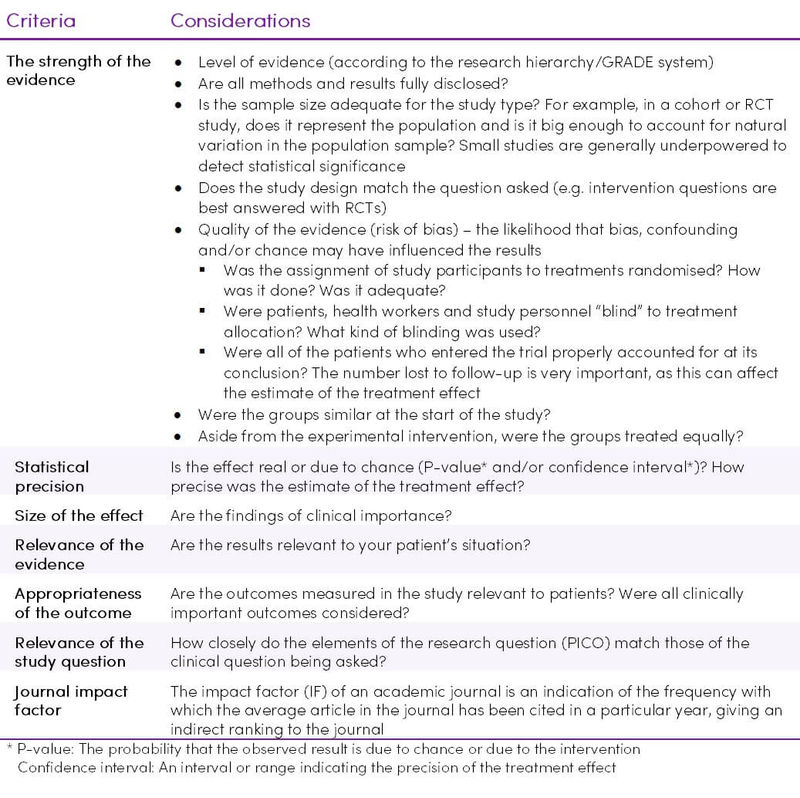

While conducting clinical research, errors can be introduced intentionally or unintentionally at a number of stages, such as study design, population selection, sample size calculation, non-compliance with the study protocol, data entry and selection of the statistical method. The rigour with which a study is conducted affects how reliable its results are (20,21). Not all case-control, cohort or randomised studies are conducted to the same standard, so a study repeated multiple times may produce different results, due either to chance or to confounding variables and biases (1). Each study should be assessed according to the criteria in Table 9.
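The point that repeating the same study can give different results purely by chance can be illustrated with a small simulation. The sketch below assumes a hypothetical two-arm trial with a true event risk of 30% in the control arm and 20% in the treatment arm, 50 participants per arm, repeated 1,000 times; these numbers are invented for illustration, and `simulate_trials` is a hypothetical helper. Because the true effect never changes, all of the spread in the observed risk differences comes from chance alone (no bias or confounding is modelled).

```python
import random
import statistics


def simulate_trials(n_trials: int, n_per_arm: int, risk_control: float,
                    risk_treatment: float, seed: int = 0) -> list[float]:
    """Repeat the same two-arm trial and return each observed risk difference.

    The true effect is fixed at (risk_treatment - risk_control); each
    participant has an event with the risk of their arm.
    """
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    results = []
    for _ in range(n_trials):
        events_control = sum(rng.random() < risk_control for _ in range(n_per_arm))
        events_treatment = sum(rng.random() < risk_treatment for _ in range(n_per_arm))
        results.append(events_treatment / n_per_arm - events_control / n_per_arm)
    return results


diffs = simulate_trials(n_trials=1000, n_per_arm=50,
                        risk_control=0.30, risk_treatment=0.20)
print("true risk difference: -0.10")
print(f"mean observed:        {statistics.mean(diffs):+.3f}")
print(f"range observed:       {min(diffs):+.2f} to {max(diffs):+.2f}")
print(f"trials showing no benefit: {sum(d >= 0 for d in diffs)} of {len(diffs)}")
```

With samples this small, a noticeable share of the simulated trials show no benefit, or even apparent harm, although the treatment is effective by construction.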

Bias and randomisation

Bias occurs when the researcher consciously or unconsciously influences the results so that they portray an outcome in line with the researcher's own decisions, views and ideological preferences (22). Types of bias include confirmation bias, plausibility bias, expectation bias and commercial bias.

By the nature of randomisation, RCTs help to control bias (23). Bias can distort the outcome of a study so that it over- or underestimates the true treatment effect. Observational studies are, by design, more prone to bias than randomised trials (1).

When appraising a randomised trial, it is first necessary to determine how randomisation was performed. The most important requirements are that allocation is truly random and that it is concealed. If it is known in advance to which group a patient will be allocated, it may be possible to influence that allocation (1).
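The two requirements for randomisation, a truly random sequence and concealed allocation, can be sketched in Python. This is a hypothetical illustration, not a description of any real trial system: it uses simple randomisation (each participant assigned independently, like a coin toss) and models concealment by keeping the pre-generated sequence private and revealing one assignment only when a participant is enrolled. In practice, concealment depends on procedures such as central or independent allocation services, which code alone cannot enforce. The class name `ConcealedAllocator` and its methods are assumptions.

```python
import random
from typing import Optional, Sequence


class ConcealedAllocator:
    """Hypothetical sketch of concealed random allocation.

    The full sequence is generated up front (ideally by someone independent
    of recruitment) and kept private; recruiters can only reveal the next
    assignment by enrolling a participant.
    """

    def __init__(self, n_participants: int,
                 arms: Sequence[str] = ("treatment", "control"),
                 seed: Optional[int] = None):
        rng = random.Random(seed)  # a recorded seed lets the sequence be audited later
        # Simple randomisation: each participant is assigned independently
        self._sequence = [rng.choice(arms) for _ in range(n_participants)]
        self._next = 0
        self.log: list[tuple[str, str]] = []  # audit trail of (participant_id, arm)

    def enrol(self, participant_id: str) -> str:
        """Call only once a participant is enrolled; reveals their allocation.

        Each participant can be allocated only once, so an unwanted
        allocation cannot be re-drawn.
        """
        if self._next >= len(self._sequence):
            raise RuntimeError("allocation sequence exhausted")
        if any(pid == participant_id for pid, _ in self.log):
            raise ValueError(f"{participant_id} has already been allocated")
        arm = self._sequence[self._next]
        self._next += 1
        self.log.append((participant_id, arm))
        return arm


allocator = ConcealedAllocator(n_participants=6, seed=2024)
for pid in ["P01", "P02", "P03"]:
    print(pid, allocator.enrol(pid))  # allocation revealed only at enrolment
```

Simple randomisation was chosen because it is the most direct reading of "truly random"; trials often use restricted schemes such as permuted blocks to keep group sizes balanced, which this sketch does not attempt.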

Table 9. Criteria for evaluating scientific literature (1,4,5,24)

Applying the evidence

Applying the best evidence in practice, i.e. evidence-based practice (EBP), requires considerable skill in synthesising the best scientific knowledge with clinical expertise and the patient's unique values and circumstances to reach a clinical decision (Figure 2).

Figure 2. Using clinical reasoning to integrate information in evidence-based practice (5)


Resources

Below are some of the commonly used online resources available for searching scientific literature. Some resources require paid subscriptions and others are open to the public.

- The Cochrane Database of Systematic Reviews

- EBSCOhost

- CINAHL (Cumulative Index to Nursing and Allied Health Literature) – available through EBSCOhost

- EMBASE

- MEDLINE – available through EBSCOhost

- PubMed

- Google Scholar

- ScienceDirect

The Cochrane Collaboration is an international voluntary organisation that prepares, maintains and promotes the accessibility of systematic reviews of the effects of healthcare.

The Cochrane Library is a database from the Cochrane Collaboration that allows simultaneous searching of six evidence-based practice databases. Cochrane Reviews are systematic reviews authored by members of the Cochrane Collaboration and available via The Cochrane Database of Systematic Reviews. They are widely recognised as the gold standard in systematic reviews due to the rigorous methodology used (5).

Takeaway on Understanding Scientific Research

- Having a thorough understanding of the different types of research and how to critically appraise each one is crucial for making good evidence-based clinical decisions.

- Using a framework such as P.I.C.O. to identify the specific question to be answered helps to target the literature search and find evidence that is applicable to your clinical question.